designs that sense

What if static visual product designs could dynamically change their appearance as a function of the customer’s movement or state?

In an everyday amble through a supermarket there are thousands of products arrayed before you, each designed to be noticed. But now imagine, if you will, a future where each product is able to sense you and use that information to dynamically modulate its appearance. Imagine products that change their look as a function of your distance from them, your direction relative to them, or even your appetitive state (whether you are, say, thirsty).

To implement such a thing in conventional gizmo terms, one might imagine each product having a little LCD screen, a camera and recognition software for sensing passersby, and perhaps the ability to sense nearby mobile phones. And even then there’d be no way to sense that the passing shopper is thirsty; for that, one would need to get the shopper to wear a trendy EEG cap, which would, ahem, reliably transmit customer needs to the nearby product computers.

But what if there were a less gadgety way to achieve this sort of smart, dynamic signage? What if all that were needed were illusions of the right sort: static, painted-on stimuli?

There are, in fact, illusions that change their appearance based on each of the properties mentioned above.

At the Human Factory we have, for example, invented ambiguous stimuli that look one way when you are thirsty and another way when you are not. These are state-dependent illusions. (See this paper.)

And we invented the first visual designs able to "trick" the visual system into carrying out computations, with a host of potential long-range applications. You can read about it here (the published paper is here).

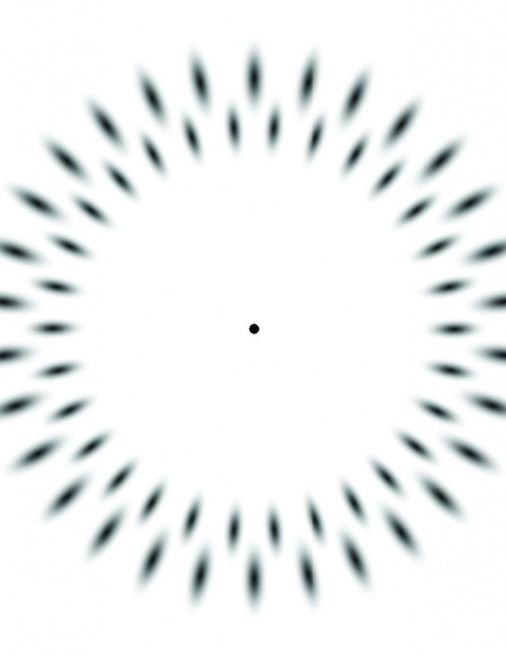

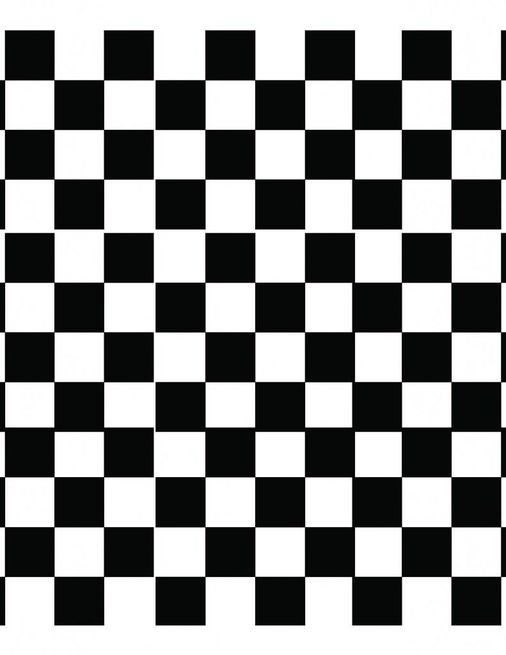

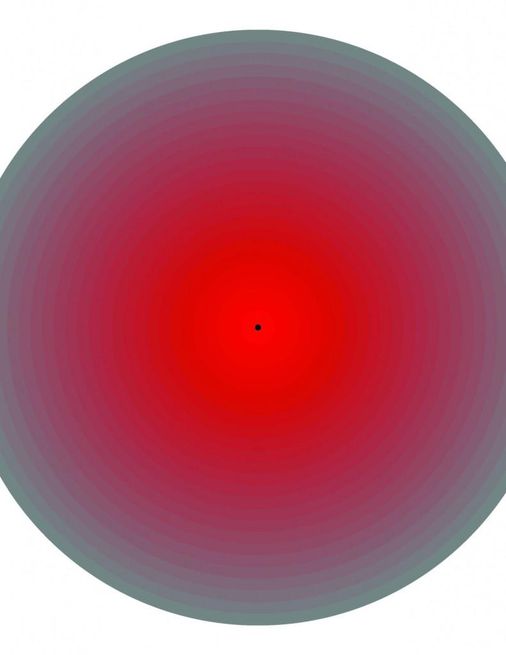

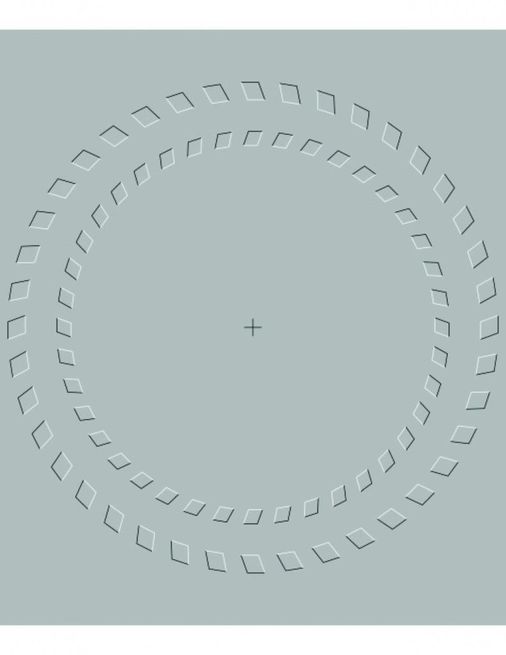

We can also employ static visual stimuli that dynamically change their appearance as the customer moves. Loom toward any of the images below and see what happens. The caption for each (once you've clicked on the image) describes it in more detail.

We at the Human Factory are also behind two other major discoveries on the meaning of visual stimuli.

One concerns the meaning of colors: that they are, at root, about the emotions and states visible on the bare skin of others. Knowing this gives clearer principles for choosing colors for logos and other designs. See this paper, and especially chapter 1 of our earlier book, VISION rEVOLUTION.

Another concerns why we see illusions at all, and the finding that radiating lines are visual cues that you are moving forward. By understanding this, we can add vibrancy and motion to static stimuli. See this TED talk by our founder on the topic.

By understanding what visual stimuli mean to your brain, we at the Human Factory can design smarter logos, smarter package designs, smarter promotional materials, and more.